Newer generation emulators are increasingly catering to the high end and almost demand it by virtue of them being based on much more recent videogame systems. While testing RetroArch and various libretro cores on our new high-end Windows desktop PC, we noticed that we could really take things up a few notches to see what we could get out of the hardware.

Dolphin

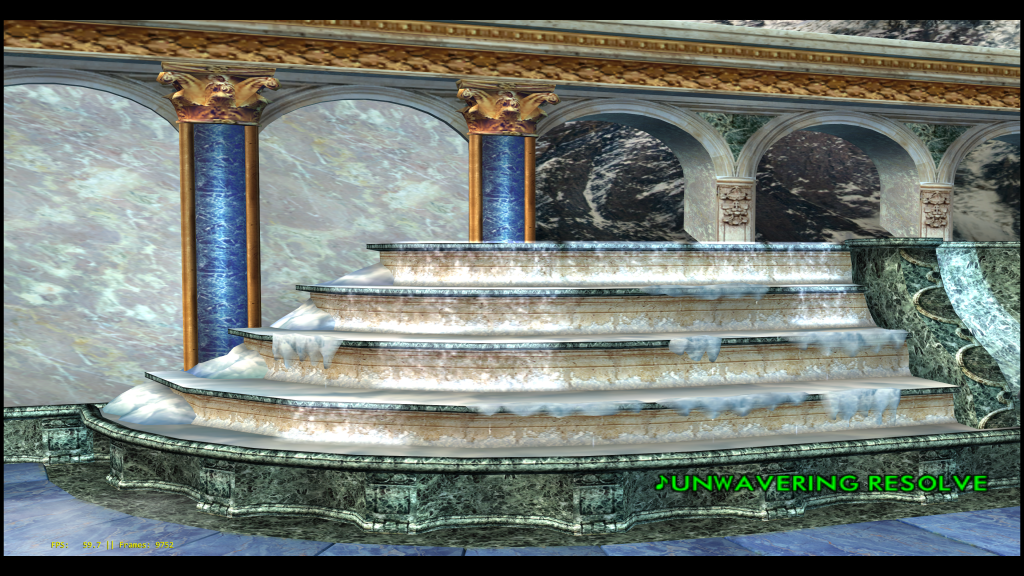

While working on the Dolphin libretro core some more, we stumbled upon the issue that internal resolution increases were still not working properly. So while fixing that in the latest core, we felt that the default scaled resolution choices that Dolphin provides (up to 8x native resolution) weren’t really putting any stress on our Windows development box (a Core i7 7700K equipped with a Titan XP).

So, in the process we added some additional resolution options so you can get up to 12K. The highest possible resolution right now is 19x (12160×10032).

As for performance results, even at the highest 19x resolution, the average framerate was still around 81fps, although there were some frame drops here and there, and I found it generally safer to dial the internal resolution down to a more conservative 12x or 15x instead. 12x resolution would be 7680×6336, which is still well over 8K resolution.
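The scaled resolutions quoted in this section are simply the native framebuffer size multiplied by the scale factor. The sketch below is illustrative only: the 640×528 native size is an assumption inferred from the 8x figure of 5120×4224, and the function name is made up for this example rather than taken from the Dolphin core.

```python
# Assumed native EFB size, inferred from 5120x4224 at 8x (5120/8, 4224/8).
NATIVE_WIDTH, NATIVE_HEIGHT = 640, 528


def scaled_resolution(scale, native=(NATIVE_WIDTH, NATIVE_HEIGHT)):
    """Return (width, height) for an integer internal resolution scale."""
    width, height = native
    return width * scale, height * scale


if __name__ == "__main__":
    # Print the scales discussed in the text.
    for scale in (8, 12, 15, 19):
        width, height = scaled_resolution(scale)
        print(f"{scale}x -> {width}x{height}")
```

Passing a different `native` tuple gives the same arithmetic for other systems, for example `scaled_resolution(16, native=(512, 240))`.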

Note that the screenshots here are compressed and they are downscaled to 4K resolution, which is my desktop resolution. This desktop resolution in turn is an Nvidia DSR custom resolution, so it effectively is a 4K resolution downsampled to my 1080p monitor. From that, I am running RetroArch with the Dolphin core. With RetroArch, downscaling is pretty much implicit and works on the fly, so through setting the internal resolution of the EFB framebuffer, I can go beyond 4K (unlike most games which just query the available desktop resolutions).

We ran some performance tests on Soul Calibur 2 with an uncapped framerate. The test box is a Core i7 7700K with 16GB of DDR4 3000MHz RAM and an Nvidia Titan XP video card. We start out with the base 8x resolution (slightly above 4K Ultra HD), which is the highest integer-scaled resolution that Dolphin usually supports. If you want to go beyond that on regular Dolphin, you have to input a custom resolution. Instead, we made the native resolution scales go all the way up to 19x.

On the Nvidia Control panel, nearly everything is maxed out – 8x anti-aliasing, MFAA, 16x Anisotropic filtering, FXAA, etc.

| Resolution | Performance (with OpenGL) | Performance (with Vulkan) |
|---|---|---|
| 8x (5120×4224) [for 5K] | 166fps | 192fps |
| 9x (5760×4752) | 165fps | 192fps |
| 10x (6400×5280) | 164fps | 196fps |
| 11x (7040×5808) | 163fps | 197fps |
| 12x (7680×6336) [for 8K] | 161fps | 193fps |
| 13x (8320×6864) | 155fps | 193fps |
| 14x (8960×7392) | 152fps | 193fps |
| 15x (9600×7920) [for 9K] | 139fps | 193fps |
| 16x (10240×8448) [for 10K] | 126fps | 172fps |
| 17x (10880×8976) | 115fps | 152fps |
| 18x (11520×9504) [for 12K] | 102fps | 137fps |
| 19x (12160×10032) | 93.4fps | 123fps |

OpenLara

The OpenLara core was previously capped at 1440p (2560×1440). We have now added resolutions of up to 16K.

| Resolution | Performance |
|---|---|
| 2560×1440 [for 1440p/2K] | 642fps |
| 3840×2160 [for 4K] | 551fps |
| 7680×4320 [for 8K] | 407fps |
| 15360×8640 [for 16K] | 191fps |
| 16000×9000 | 176fps |

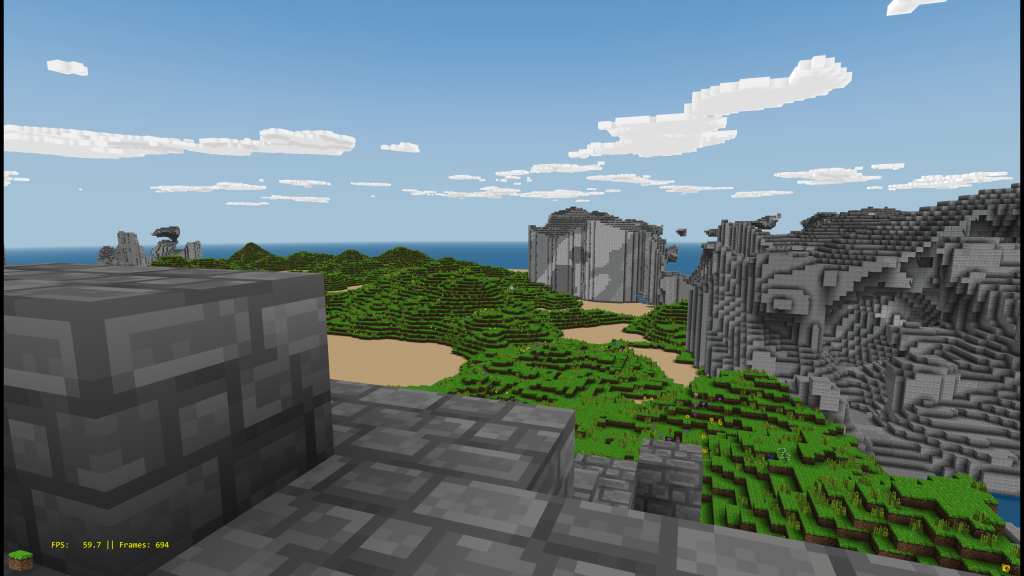

Craft

Previously, the Craft core supported only up to 1440p. Now it supports up to 16K and slightly higher.

For the Craft core, we are setting the ‘draw distance’ to 32, which is the highest draw distance available to this core. With the draw distance set this far back, you can even see some pop-in right now (terrain that is not yet rendered and will only be shown when the viewer gets closer to it).

| Resolution | Performance |
|---|---|
| 2560×1600 [for 1440p/2K] | 720fps |
| 3840×2160 [for 4K] | 646fps |
| 7680×4320 [for 8K] | 441fps |
| 15360×8640 [for 16K] | 190fps |
| 16000×9000 | 168fps |

Parallel N64 – Angrylion software renderer

So accurate software-based emulation of the N64 has remained an elusive pipe dream for decades. However, it seems things are finally changing now on high-end hardware.

This test was conducted on an Intel i7 7700K running in Boost Mode (4.80GHz). We are using both the OpenGL video driver and the Vulkan video driver for this test, and we are running the game Super Mario 64. The exact spot we are testing is the Princess Peach castle courtyard.

Super Mario 64

| Description | Performance (with OpenGL) | Performance (with Vulkan) |
|---|---|---|
| Angrylion [no VI filter] | 73fps | 75fps |
| Angrylion [with VI filter] | 61fps | 63fps |

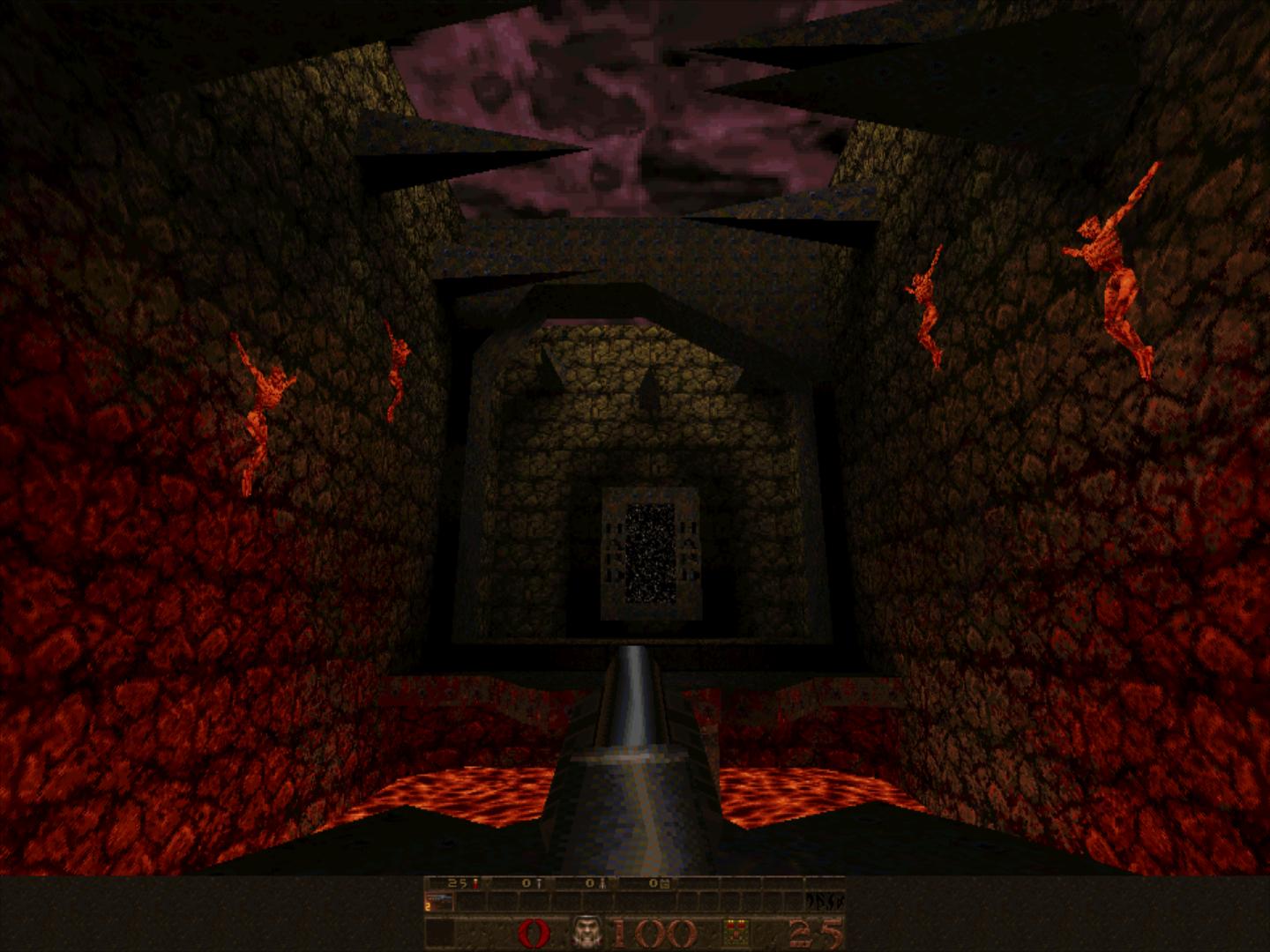

Quake 64

| Description | Performance (with OpenGL) | Performance (with Vulkan) |
|---|---|---|
| Angrylion [no VI filter] | 81fps | 82.5fps |
| Angrylion [with VI filter] | 68fps | 72fps |

Killer Instinct Gold

| Description | Performance (with OpenGL) | Performance (with Vulkan) |
|---|---|---|
| Angrylion [no VI filter] | 57.9fps | 58.7fps |
| Angrylion [with VI filter] | 54.6fps | 55fps |

GoldenEye 007

Tested at the Dam level – beginning

| Description | Performance (with OpenGL) | Performance (with Vulkan) |
|---|---|---|
| Angrylion [no VI filter] | 54.9fps | 43.8fps |
| Angrylion [with VI filter] | 45.6fps | 40.9fps |

Note that we are using the cxd4 RSP interpreter which, despite the SSE optimizations, would still be pretty slow compared to any RSP dynarec, so these results are impressive to say the least. There are games which dip more than this; for instance, Killer Instinct Gold can run at 48fps on the logo title screen. On average, though, if you turn off VI filtering, most games should run at full speed with this configuration.

In case you didn’t notice already, Vulkan doesn’t really benefit us much when we do plain software rendering. We are talking maybe a conservative 3fps increase with VI filtering, and about 2fps or maybe even a bit less with VI turned off. Not much to brag about, but it could help in case you barely get 60fps and you need a 2+ fps boost to avoid v-sync stutters.
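To make the 60fps threshold concrete, here is a small sketch that converts the measured averages (Angrylion with VI filter, taken from the tables above) into frame times and checks them against a 60Hz refresh. The 60Hz target and the helper names are assumptions for illustration, and an average framerate hides momentary dips, so treat the check as a rough indicator only.

```python
def frame_time_ms(fps):
    """Average time spent per frame, in milliseconds."""
    return 1000.0 / fps


def holds_refresh(fps, refresh_hz=60.0):
    """True if the average framerate is at or above the display refresh rate."""
    return fps >= refresh_hz


# (OpenGL fps, Vulkan fps) with the VI filter enabled, from the tables above.
results_with_vi = {
    "Super Mario 64": (61, 63),
    "Quake 64": (68, 72),
    "Killer Instinct Gold": (54.6, 55),
    "GoldenEye 007": (45.6, 40.9),
}

if __name__ == "__main__":
    for game, (gl_fps, vk_fps) in results_with_vi.items():
        print(
            f"{game}: OpenGL {frame_time_ms(gl_fps):.2f} ms "
            f"(holds 60Hz: {holds_refresh(gl_fps)}), "
            f"Vulkan {frame_time_ms(vk_fps):.2f} ms "
            f"(holds 60Hz: {holds_refresh(vk_fps)})"
        )
```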

Oddly enough, the sole exception to this is GoldenEye 007, where the tables are actually turned, and OpenGL actually leaps ahead of Vulkan quite significantly, conservatively by about 5fps with VI filter applied, and even higher with no VI filter. I tested this many times over to see if there was maybe a slight discrepancy going on, but I got the exact same results each and every time.

Parallel N64 – Parallel Vulkan renderer

So we have seen how software-based LLE RDP rendering runs. This puts all the workload on the CPU. So what if we reverse the situation and put it all on the GPU instead? That is essentially the promise of the Parallel Vulkan renderer. So let’s run the same tests on it.

This test was conducted on an Intel i7 7700K running in Boost Mode (4.80GHz). We are using the Vulkan video driver for this test, and we are running the game Super Mario 64. The exact spot we are testing is the Princess Peach castle courtyard.

Super Mario 64

| Description | Performance |
|---|---|
| With synchronous RDP | 192fps |
| Without synchronous RDP | 222fps |

Quake 64

| Description | Performance |
|---|---|
| With synchronous RDP | 180fps |
| Without synchronous RDP | 220fps |

Killer Instinct Gold

| Description | Performance |
|---|---|
| With synchronous RDP | 174fps |
| Without synchronous RDP | 214fps |

GoldenEye 007

Tested at the Dam level – beginning

| Description | Performance |
|---|---|
| With synchronous RDP | 88fps |
| Without synchronous RDP | 118fps |

As you can see, performance nearly doubles when going from Angrylion to the Parallel renderer with synchronous RDP enabled, and goes beyond that with it disabled. Do note that asynchronous RDP is regarded as a hack and it can result in many framebuffer-oriented glitches among other things, so it’s best to run with synchronous RDP for the most reliable results.

We are certain that by using the LLVM RSP dynarec, the performance difference between Angrylion and Parallel would widen even further. Even though there are still a few glitches and omissions in the Parallel renderer compared to Angrylion, it’s clear that there is a lot of promise to this approach of putting the RDP on the GPU.

Conclusion: It’s quite clear that even a quad-core 4.8GHz i7 CPU only ‘nearly’ manages to run most games with Angrylion [software] at full speed, and it doesn’t leave you with a lot of headroom. Moving the work to the GPU [through Parallel RDP] results in a doubling of performance with the conservative synchronous option enabled, and even more if you decide to go with asynchronous mode (buggier but faster).
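The speedup can be worked out directly from the tables above. The sketch below uses the Angrylion Vulkan results without the VI filter as the baseline, which is our choice for illustration (picking the OpenGL column or the VI-filtered rows would give different ratios), and the helper name is made up for this example.

```python
# Average fps per game, copied from the tables above.
angrylion_vulkan_no_vi = {
    "Super Mario 64": 75,
    "Quake 64": 82.5,
    "Killer Instinct Gold": 58.7,
    "GoldenEye 007": 43.8,
}
parallel_sync = {
    "Super Mario 64": 192,
    "Quake 64": 180,
    "Killer Instinct Gold": 174,
    "GoldenEye 007": 88,
}
parallel_async = {
    "Super Mario 64": 222,
    "Quake 64": 220,
    "Killer Instinct Gold": 214,
    "GoldenEye 007": 118,
}


def speedup(baseline_fps, new_fps):
    """Ratio of the new framerate to the baseline framerate."""
    return new_fps / baseline_fps


if __name__ == "__main__":
    for game, base in angrylion_vulkan_no_vi.items():
        sync_ratio = speedup(base, parallel_sync[game])
        async_ratio = speedup(base, parallel_async[game])
        print(f"{game}: sync {sync_ratio:.2f}x, async {async_ratio:.2f}x")
```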

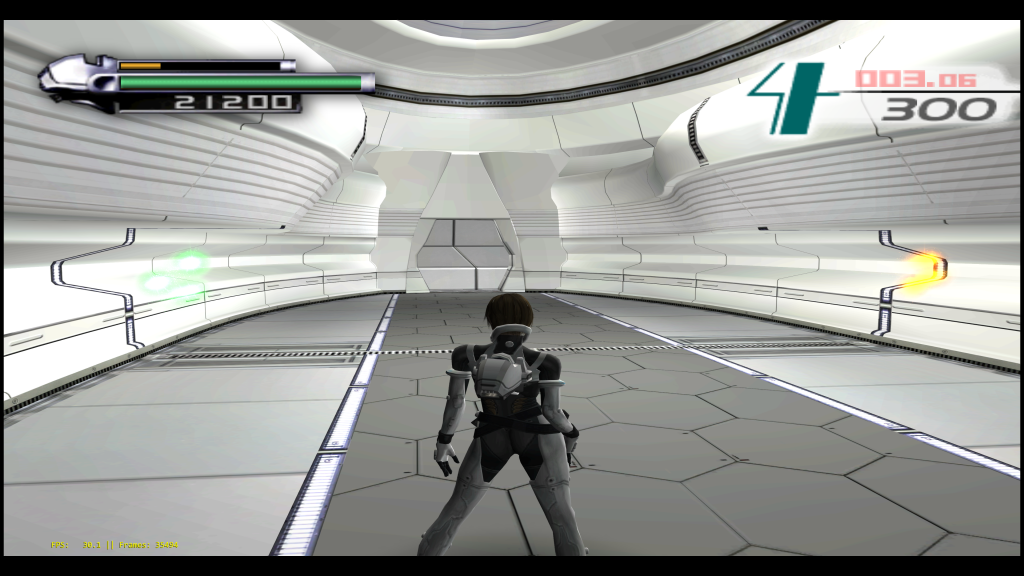

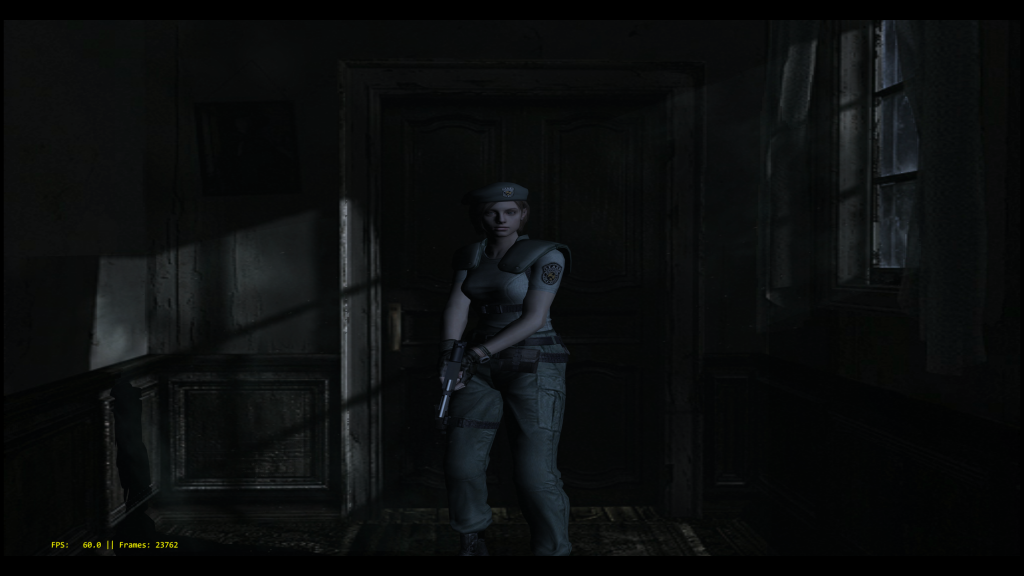

Beetle PSX

Previously, Beetle PSX would only provide internal resolution increases up to 8 times the original resolution. We have now extended this to 32x for software and Vulkan, and 16x for OpenGL.

The results are surprising – while the Vulkan renderer is far more mature than the OpenGL renderer and implements the mask bit unlike the GL renderer (along with some other missing bits in the current GL renderer), the GL renderer leaps ahead in terms of performance at nearly every resolution.

Crash Bandicoot

Crash Bandicoot is a game that ran at a resolution of 512×240.

| Resolution | Performance (with OpenGL) [with PGXP] | Performance (with OpenGL) [w/o PGXP] | Performance (with Vulkan) [with PGXP] | Performance (with Vulkan) [w/o PGXP] | Performance (software OpenGL) | Performance (software Vulkan) |
|---|---|---|---|---|---|---|
| 8192×3840 [16x] [for 5K] | 188.8fps | 266fps | 217fps | 239fps | 4.4fps | 5.3fps |
| 4096×1920 [8x] [for 2K] | 216fps | 296fps | 218fps | 240fps | 16fps | 17.5fps |
| 2048×960 [4x] | 215fps | 296fps | 216fps | 239fps | 52fps | 57.9fps |
| 1024×480 [2x] | 216fps | 296fps | 216fps | 239fps | 138fps | 145fps |

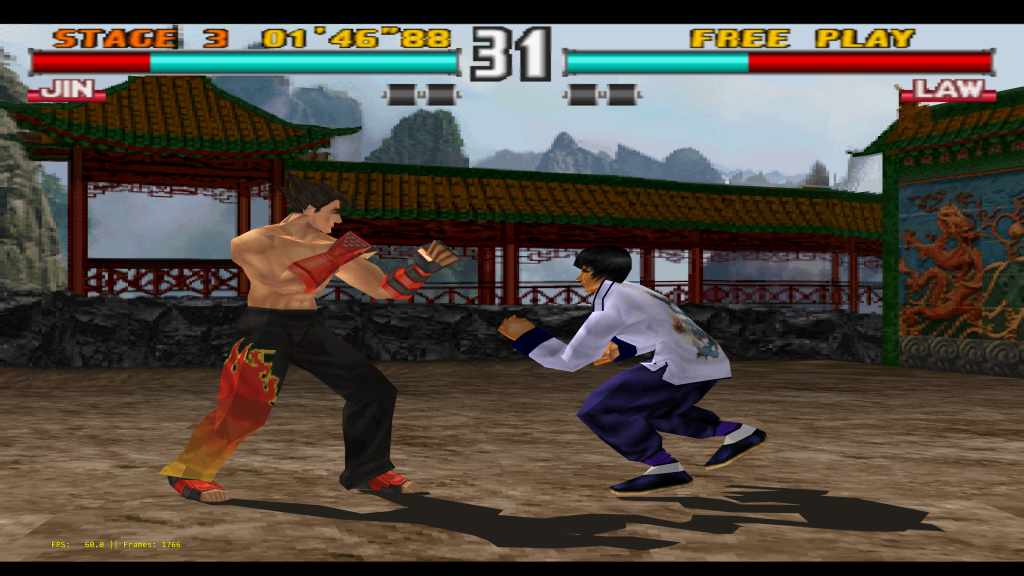

Tekken 3

Tekken 3 is a game that ran at a resolution of 368×480.

| Resolution | Performance (with OpenGL) [with PGXP] | Performance (with OpenGL) [w/o PGXP] | Performance (with Vulkan) [with PGXP] | Performance (with Vulkan) [w/o PGXP] | Performance (software OpenGL) | Performance (software Vulkan) |
|---|---|---|---|---|---|---|
| 11776×15360 [32x] [for 12K] | N/A | N/A | 127fps | 127.4fps | N/A | N/A |
| 5888×7680 [16x] [for 4K] | 188.5fps | 266fps | 184.4fps | 211fps | 4.4fps | 6.6fps |
| 2944×3840 [8x] [for 2K] | 186.5fps | 208fps | 183.5fps | 269fps | 22fps | 25.2fps |
| 1472×1920 [4x] | 184.5fps | 270fps | 230.5fps | 210fps | 52fps | 59.4fps |
| 736×960 [2x] | 232fps | 271fps | 185.5fps | 210fps | 129fps | 137fps |

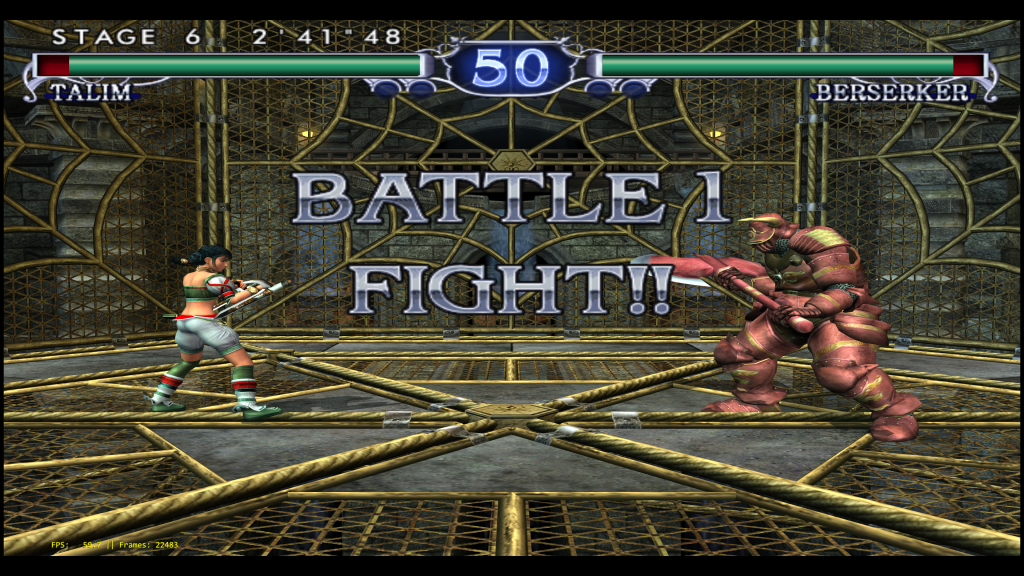

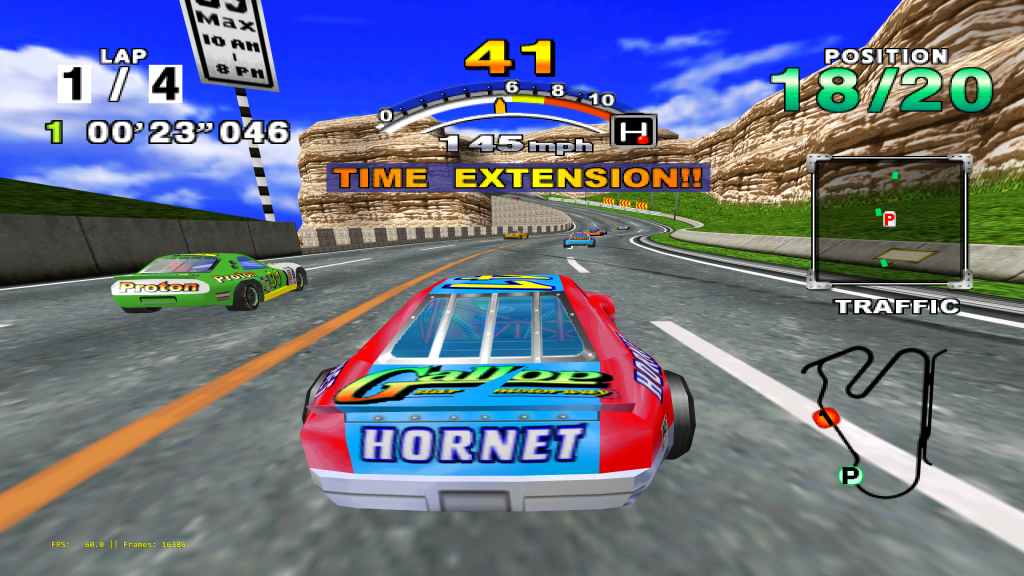

Reicast

Dead or Alive 2

| Description | Performance |
|---|---|
| 4480×3360 | 206fps |
| 5120×3840 | 206fps |
| 5760×4320 | 206fps |
| 6400×4800 | 204fps |
| 7040×5280 | 206fps |
| 7680×5760 | 206fps |
| 8320×6240 | 204fps |
| 8960×6720 | 204fps |
| 9600×7200 | 207fps |
| 10240×7680 | 206fps |
| 10880×8160 | 207fps |
| 11520×8640 | 207fps |
| 12160×9120 | 194fps |
| 12800×9600 | 193fps |

As you can see, it isn’t until we reach 12160×9120 that Reicast’s performance finally drops from an almost consistent 206/207fps to a somewhat lower value. Do note that every resolution was tested in the same environment. When alpha effects and RTT (Render to Texture) effects are being applied onscreen, there may well be dips at resolutions higher than 8K, whereas 8K and below should handle them with relative ease.

Mupen64plus – GlideN64 OpenGL renderer

This core uses Mupen64plus as the core emulator plus the GlideN64 OpenGL renderer.

Super Mario 64

| Description | Performance |
|---|---|
| 3840×2880 – no MSAA | 617fps |
| 3840×2880 – 2x/4x MSAA | 181fps |
| 4160×3120 – no MSAA | 568fps |
| 4160×3120 – 2x/4x MSAA | 112fps |
| 4480×3360 – no MSAA | 538fps |
| 4480×3360 – 2x/4x MSAA | 103fps |
| 4800×3600 – no MSAA | 524fps |
| 4800×3600 – 2x/4x MSAA | 94fps |
| 5120×3840 – no MSAA | 486fps |
| 5120×3840 – 2x/4x MSAA | 82fps |
| 5440×4080 – no MSAA | 199fps |
| 5440×4080 – 2x/4x MSAA | 80fps |
| 5760×4320 – no MSAA | 194fps |
| 5760×4320 – 2x/4x MSAA | 74fps |
| 6080×4560 – no MSAA | 190fps |
| 6080×4560 – 2x/4x MSAA | 68fps |
| 6400×4800 – no MSAA | 186fps |
| 6400×4800 – 2x/4x MSAA | 61.3fps |
| 7680×4320 – no MSAA | 183fps |
| 7680×4320 – 2x/4x MSAA | 39.4fps |

GoldenEye 007

Tested at the Dam level – beginning

| Description | Performance |
|---|---|
| 3840×2880 – no MSAA | 406fps |
| 3840×2880 – 2x/4x MSAA | 100fps |
| 4160×3120 – no MSAA | 397fps |
| 4160×3120 – 2x/4x MSAA | 65fps |
| 4480×3360 – no MSAA | 375fps |
| 4480×3360 – 2x/4x MSAA | 60fps |
| 4800×3600 – no MSAA | 342fps |
| 4800×3600 – 2x/4x MSAA | 54fps |
| 5120×3840 – no MSAA | 310fps |
| 5120×3840 – 2x/4x MSAA | 51fps |
| 5440×4080 – no MSAA | 70fps |
| 5440×4080 – 2x/4x MSAA | 46fps |
| 5760×4320 – no MSAA | 78.9fps |
| 5760×4320 – 2x/4x MSAA | 42fps |
| 6080×4560 – no MSAA | 86fps |
| 6080×4560 – 2x/4x MSAA | 37fps |
| 6400×4800 – no MSAA | 79fps |
| 6400×4800 – 2x/4x MSAA | 27fps |
| 7680×4320 – no MSAA | 79fps |
| 7680×4320 – 2x/4x MSAA | 33.2fps |

Preface: Immediately after going beyond 3840×2880 (slightly higher than 4K), we notice that turning on MSAA results in several solid black strips being rendered where there should be textures and geometry. We also notice that enabling MSAA incurs a huge performance hit, and it doesn’t matter whether you apply 2 or 4 samples: it is uniformly slow. There also appear to be several throughput bottlenecks in rendering. As soon as we move from 5120×3840 to 5440×4080 in GoldenEye 007 (a relatively minor bump), we go from 310fps to 70fps, a huge drop-off. Suffice it to say, while you can play with Reicast (Dreamcast emulator) and Dolphin (GameCube/Wii) at 8K without effort and even have enough headroom to go all the way to 12K, don’t try this anytime soon with GlideN64.
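To put that drop-off in perspective, the sketch below compares how much the pixel count grows between the two resolutions with how much the framerate falls, using the GoldenEye 007 no-MSAA figures from the table above. Pixels per second is only a crude proxy that we are choosing for illustration; it is not a metric reported by GlideN64, and the helper names are made up.

```python
def pixel_count(width, height):
    """Number of pixels in one frame."""
    return width * height


def throughput_mpix_per_s(width, height, fps):
    """Rendered megapixels per second at a given resolution and framerate."""
    return pixel_count(width, height) * fps / 1e6


# (width, height, fps) for GoldenEye 007 without MSAA, from the table above.
before = (5120, 3840, 310)
after = (5440, 4080, 70)

pixel_growth = pixel_count(*after[:2]) / pixel_count(*before[:2])
fps_ratio = after[2] / before[2]

if __name__ == "__main__":
    print(f"Pixel count grows by a factor of {pixel_growth:.3f}")
    print(f"Framerate falls to {fps_ratio:.1%} of its previous value")
    print(f"Throughput before: {throughput_mpix_per_s(*before):.0f} Mpix/s")
    print(f"Throughput after:  {throughput_mpix_per_s(*after):.0f} Mpix/s")
```

If the renderer were simply limited by fill rate, the framerate would be expected to fall roughly in proportion to the pixel growth; a much steeper fall points to some other limit being hit.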

We suspect there are several huge bottlenecks in this renderer that prevent it from reaching higher performance, especially since people on 1060s have also complained about less than stellar performance. That being said, GlideN64 has certain advantages over Glide64: it emulates certain FBO effects which Glide64 doesn’t. It is also less accurate than Glide64 in other areas, so you have to pick your poison on a per-game basis.

We still believe that the future of N64 emulation relies more on accurate renderers like Parallel RDP, which are not riddled with per-game hacks, than on the traditional HLE RDP approach as seen in GlideN64 and Glide64. Nevertheless, people love their internal resolution upscaling, so there will always be a built-in audience for these renderers, and it’s always nice to be able to have choices.