The ParaLLEl N64 Libretro core has received an update today that adds the brand new paraLLEl-RDP Vulkan renderer to the emulator core.

I implore everybody to read Themaister’s blog post (Reviving and rewriting paraLLEl-RDP – Fast and accurate low-level N64 RDP emulation) for a deep dive into this new renderer.

Requirements

- You need a graphics card that supports the Vulkan graphics API.

- It’s currently only available on Windows and Linux.

- Right now the renderer requires a specific Vulkan extension called ‘VK_EXT_external_memory_host’. Currently, only the Nvidia binary drivers for Linux lack support for this extension. Support has been requested, but there is no ETA yet on when it will be implemented.
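One way to check the extension requirement above is to search the text report printed by the `vulkaninfo` utility for the extension name. The snippet below is only a minimal sketch in Python: the helper name `has_device_extension` and the sample report are made up for illustration, and because real `vulkaninfo` output differs between versions, the check does nothing more than look for the name as a whole word.

```python
import re

REQUIRED_EXTENSION = "VK_EXT_external_memory_host"

def has_device_extension(report, name=REQUIRED_EXTENSION):
    """Return True if `name` appears as a whole token in a vulkaninfo-style report."""
    # Lookarounds stop a longer identifier from matching by accident.
    pattern = r"(?<![A-Za-z0-9_])" + re.escape(name) + r"(?![A-Za-z0-9_])"
    return re.search(pattern, report) is not None

# Hypothetical excerpt, invented for this example (not real driver output).
sample_report = """
Device Extensions:
    VK_KHR_swapchain : extension revision 70
    VK_EXT_external_memory_host : extension revision 1
"""

print(has_device_extension(sample_report))
```

On a real machine the report could come from `subprocess.run(["vulkaninfo"], capture_output=True, text=True).stdout`, assuming the `vulkaninfo` tool is installed and on the PATH.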

What’s new since the old ParaLLEl RDP?

- Completely rewritten from the ground up

- Bit-exact renderer

- Should be pretty much on par with Angrylion accuracy-wise now – none of the issues that plagued the old paraLLEl RDP

- Now emulates the VI (Video Interface) as well

- Basic deinterlacing for interlaced video modes

How to install and set it up

- In RetroArch, go to Online Updater.

- (If you have paraLLEl N64 already installed) – Select ‘Update Installed Cores’. This will update all the cores that you already installed.

- (If you don’t have paraLLEl N64 installed already) – go to ‘Core Updater’, and select ‘Nintendo – Nintendo 64 (paraLLEl N64)’.

- Now start up a game with this core.

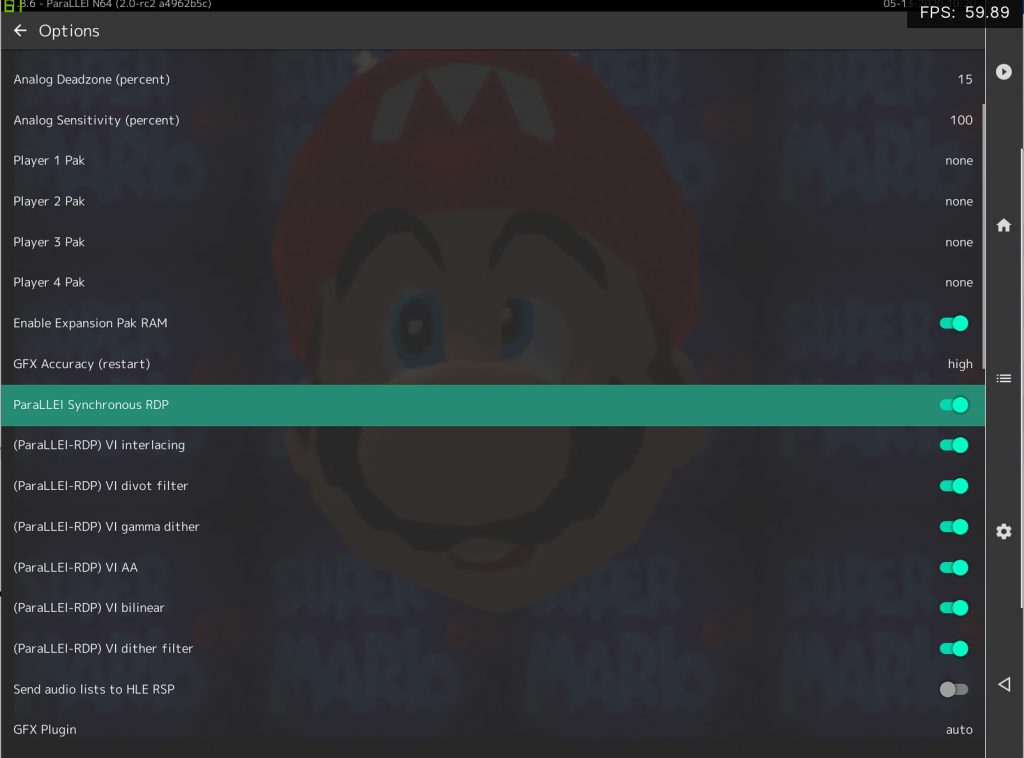

- Go to the Quick Menu and go to ‘Options’. Scroll down the list until you reach ‘GFX Plugin’. Set this to ‘parallel’. Set ‘RSP plugin’ to ‘parallel’ as well.

- For the changes to take effect, we now need to restart the core. You can either close the game or quit RetroArch and start the game up again.
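The two core options from the steps above can also be set outside the menu, since RetroArch stores core options as `key = "value"` lines in a text file. The following is a rough sketch of that idea in Python. The key names `parallel-n64-gfxplugin` and `parallel-n64-rspplugin` are assumptions here, as is the helper name `set_core_options`; check your own core options file for the exact keys before relying on them.

```python
# Assumed key names; verify them against your own core options file.
PARALLEL_OPTIONS = {
    "parallel-n64-gfxplugin": "parallel",
    "parallel-n64-rspplugin": "parallel",
}

def set_core_options(cfg_text, updates):
    """Return cfg_text with each `key = "value"` line updated, or appended if missing."""
    remaining = dict(updates)
    out_lines = []
    for line in cfg_text.splitlines():
        key = line.split("=", 1)[0].strip()
        if "=" in line and key in remaining:
            # Rewrite the existing line with the new value.
            out_lines.append(f'{key} = "{remaining.pop(key)}"')
        else:
            out_lines.append(line)
    # Any option not present yet is added at the end.
    for key, value in remaining.items():
        out_lines.append(f'{key} = "{value}"')
    return "\n".join(out_lines) + "\n"

# Hypothetical file contents, for illustration only.
example_cfg = 'parallel-n64-gfxplugin = "auto"\nsome-other-option = "enabled"\n'
print(set_core_options(example_cfg, PARALLEL_OPTIONS))
```

As with the menu route, the core still has to be restarted before the new values take effect.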

Progress and development in N64 emulation over the past decade

State of HLE emulation

IMHO, today’s release represents one of the biggest steps taken so far to elevate Nintendo 64 emulation as a whole. N64 emulation has had a bad rep for over a decade because of HLE RDP renderers that fail to reproduce every game’s graphics correctly, and because of the many RSP microcodes that were never emulated, but it has gotten significantly better over the years. On the HLE front, things have progressed: GLideN64 has made big strides in emulating most of the major games, and the HLE RSP implementation used by Mupen 64 Plus is starting to emulate most of the major microcodes that developers made for N64 games. There are still obvious limiting factors on the HLE front, though. For instance, GLideN64 still requires OpenGL, and renderers for Vulkan and other modern graphics APIs have not been implemented as of this date (although they could be).

State of LLE emulation

So that’s the HLE front. For the purposes of this blog article, though, we are mostly concerned with Low-Level Emulation. Both HLE and LLE N64 emulation are valid approaches, but if we want to reproduce the N64 accurately, we ultimately have to go LLE. So, what is the state of LLE emulation?

For LLE emulation, one of the advancements of the past few years has been a multithreaded version of Angrylion. Angrylion is the most accurate software RDP renderer to date. Its main problem has always been how slow it is. Up until, say, the mid-to-late ’10s, desktop PCs just did not have the CPU power to run any game at full speed with this renderer. Multithreading has given Angrylion some big performance gains that were previously thought unimaginable.

However, Angrylion as a software renderer can only be taken so far. The fact remains that it is a big bottleneck on the CPU, and you can easily see CPU activity exceeding 65% on a modern rig with the multithreaded Angrylion renderer. Software rendering is just never going to be a particularly fast way of doing 3D rasterization.

So, back in 2016, the first attempt at making a hardware renderer that can compete with Angrylion was made. It was a big release for us and it marked one of the first pieces of software to be released that was designed exclusively around the then-new Vulkan graphics API. You can read our old blog post here.

It was a valiant first attempt at making a speedy Angrylion port to hardware. Unfortunately, this first version was full of bugs, and it had some big architectural issues that just made further development on it very hard. So it didn’t see much further development for the past few years.

This year, all the stars have aligned. First out of the gates was the resurrection of paraLLEl-RSP, another project by Themaister. Low-level N64 emulation places a big demand on the CPU, and while cxd4’s RSP interpreter is very accurate, to get at least a 2x leap in performance, a dynamic recompiler approach has to be taken. To that end, this year not only was paraLLEl-RSP resurrected, but we moved the dynamic recompiler architecture from LLVM to Lightrec. It’s a bit less performant than LLVM to be sure but it also has some big advantages – LLVM runtime libraries are very hard to embed and integrate for various platforms, while Lightrec doesn’t have these dependency issues. Furthermore, LLVM would take a long time recompiling code blocks, and it would cause big stutters during gameplay (for instance, bringing up the map in Doom 64 for the first time would cause like a 5-second freeze in the gameplay while it was recompiling a code block – obviously not ideal). With Lightrec, all those stutters were more or less gone.

So, Q1 2020. We now have multithreaded Angrylion, which leverages the multi-core CPUs of today’s hardware to get better performance results. We have ParaLLEl RSP, a low-level RSP plugin with a dynamic recompiler that gives us a big bump in performance. But one piece of the puzzle is still missing, and it’s perhaps the most significant. Multithreaded Angrylion is still a software renderer, and therefore it still massively bottlenecks the CPU. Whether you can spread that load out over multiple cores or not ultimately matters little – CPUs just are not good at doing fast 3D rasterization, a lesson learned by nearly every mid ’90s PC game developer, and the reason why 3D accelerated hardware could not have come soon enough.

So, the obvious Next Big Thing in N64 emulation was to get rid of this CPU bottleneck and move Angrylion kicking and screaming to the GPU, and this time avoid all of the issues that plagued the initial paraLLEl RDP prototype.

Where does that leave us?

With a very accurate Angrylion-quality LLE RDP renderer running on the GPU, and a dynarec LLE RSP core, you will be surprised at how accurate Mupen 64 Plus is now. Nearly every commercial game now runs as expected with almost no graphical issues, and the sound is as you’d expect it to be. It looks, runs and functions just like a real N64. And if you’re on a discrete Nvidia or AMD GPU, your GPU activity will be 4% on average, whether it’s a stone-age GPU from the year 2013 like an AMD R9 290x, or an Nvidia Geforce 2080 Ti. Nearly any discrete GPU made from 2013 to 2020 that supports the Vulkan API seems to eat low-level N64 graphics for breakfast. CPU activity has also decreased significantly. With multithreaded Angrylion and Parallel RSP, there would be about 68% CPU activity on my rig. This is brought down to just 7 to 10% using paraLLEl RDP instead of Angrylion. Software rendering on the CPU is just a huge bottleneck no matter which way you slice it.

So for most practical purposes, using the paraLLEl RDP and paraLLEl RSP cores in tandem, the future is now. Accurate N64 emulation is here, it’s no longer slow, and it’s no longer completely CPU bound either. And you can play it on RetroArch right now, right today. We don’t have to wait for a near-accurate representation of an N64, it’s already here with us for all practical gameplay purposes.

How much faster is paraLLEl RDP compared to Angrylion? That is hard to say, and it depends on the game you’re running. On average you can expect a 2x speedup. However, notice that at native resolution rendering, any discrete GPU since 2013 eats this workload for breakfast. This means you’re completely CPU bound in terms of performance most of the time. The better your CPU is at single-threaded workloads (IPC), the better it will perform. Core count is a less significant factor. On my specific rig (a 7700k i7 Intel CPU paired with a 2080 Ti), I think the CPU was the weakest link in the chain. The GPU matters relatively little: the 2080 Ti was mostly idle during these tests. The same goes for an old 2013 AMD card that I tested with the same CPU – GPU activity remained flat at around 4%. As Themaister has indicated in his blog post, this leaves plenty of room for upscaled resolutions, which are on the roadmap for future versions.

Benchmarks

System specs: CPU – Intel Core i7 7700k | GPU – Geforce RTX 2080 Ti (11GB VRAM, 2018) | 16GB RAM

| Title | Angrylion | ParaLLEl RDP (Synchronous) | ParaLLEl RDP (Asynchronous) |
| --- | --- | --- | --- |
| 007 GoldenEye | 82fps | 119fps | 133fps |
| Banjo Tooie | 72fps | 132fps | 148fps |
| Doom 64 | 174fps | 282fps | 322fps |
| F-Zero X | 158fps | 370fps | 478fps |
| Hexen | 156fps | 300fps | 360fps |
| Indiana Jones and the Infernal Machine | 61fps | 94fps | 114fps |
| Killer Instinct Gold | ~103fps | ~168fps | ~240fps |
| Legend of Zelda: Majora’s Mask | 122fps | 202fps | 220fps |
| Mario Kart 64 | ~178fps | ~309fps | ~330-350fps |
| Perfect Dark (High-res) | 70fps | 125fps | 130fps |
| Pilotwings 64 | 87fps | 125fps | 144fps |
| Quake | 188fps | 262fps | 300fps |
| Resident Evil 2 | 183fps | 226fps | 383fps (*) |
| Star Wars Episode I: Battle for Naboo | 90fps | 136fps | 178fps |
| Super Mario 64 | 129fps | 204fps | 220fps |
| Vigilante 8 (Low-res) | 63fps | 91fps | 112fps |
| Vigilante 8 (High-res) | ~46-55fps | ~92-99fps | ~119fps |
| World Driver Championship | ~109fps | ~225fps | ~257fps |

(*) – Has game-breaking issues in this mode

System specs: CPU – Intel Core i7 7700k | GPU – AMD Radeon R9 290x (4GB VRAM, 2013) | 16GB RAM

| Title | Angrylion | ParaLLEl RDP (Synchronous) | ParaLLEl RDP (Asynchronous) |
| --- | --- | --- | --- |
| 007 GoldenEye | 82fps | 119fps | 133fps |
| Banjo Tooie | 72fps | 132fps | 148fps |
| Doom 64 | 174fps | 282fps | 322fps |
| F-Zero X | 158fps | 360fps | 439fps |
| Hexen | 156fps | 288fps | 352fps |
| Indiana Jones and the Infernal Machine | 61fps | 94fps | 114fps |
| Killer Instinct Gold | ~93fps | ~162fps | ~239fps |
| Legend of Zelda: Majora’s Mask | 122fps | 202fps | 220fps |
| Mario Kart 64 | ~157fps | ~274fps | ~292fps |
| Perfect Dark (High-res) | 70fps | 125fps | 130fps |
| Pilotwings 64 | 87fps | 125fps | 144fps |
| Quake | 189fps | 262fps | 326fps |
| Resident Evil 2 | 156fps | 226fps | 383fps (*) |
| Star Wars Episode I: Battle for Naboo | 90fps | 136fps | 178fps |
| Super Mario 64 | 129fps | 195fps | 209fps |
| Vigilante 8 (Low-res) | 63fps | 91fps | 112fps |
| Vigilante 8 (High-res) | ~46-55fps | ~92-99fps | ~119fps |
| World Driver Championship | ~109fps | ~224fps | ~257fps |

(*) – Has game-breaking issues in this mode
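To put the “2x on average” figure in context, the benchmark numbers can be turned into speedup factors over Angrylion. The sketch below does this in Python for a handful of rows copied from the RTX 2080 Ti table. The helper names are made up for this example, and the subset is arbitrary, so treat the averages as a rough illustration rather than a summary of the whole table.

```python
# (title, Angrylion fps, synchronous fps, asynchronous fps):
# a handful of rows copied from the RTX 2080 Ti table.
RESULTS = [
    ("007 GoldenEye", 82, 119, 133),
    ("Banjo Tooie", 72, 132, 148),
    ("Doom 64", 174, 282, 322),
    ("F-Zero X", 158, 370, 478),
    ("Super Mario 64", 129, 204, 220),
]

def speedup(baseline_fps, new_fps):
    """Speedup factor of new_fps relative to baseline_fps."""
    return new_fps / baseline_fps

def mean(values):
    """Arithmetic mean of a sequence of numbers."""
    values = list(values)
    return sum(values) / len(values)

sync_speedups = [speedup(angry, sync) for _, angry, sync, _ in RESULTS]
async_speedups = [speedup(angry, asyn) for _, angry, _, asyn in RESULTS]

for row, s, a in zip(RESULTS, sync_speedups, async_speedups):
    print(f"{row[0]:<16} sync {s:.2f}x  async {a:.2f}x")
print(f"{'mean':<16} sync {mean(sync_speedups):.2f}x  async {mean(async_speedups):.2f}x")
```

For this subset the asynchronous mode averages roughly 2x over Angrylion and the synchronous mode around 1.8x, with a wide spread between games (F-Zero X gains far more than GoldenEye).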

Core option explanations

paraLLEl RDP has some special dedicated options. You can change these by going to Quick Menu and going to Options. Here’s a quick breakdown of what they do –

ParaLLEl Synchronous RDP:

Turning this off allows for higher CPU/GPU parallelism. However, certain games might run into problems if this is left disabled. An example of such a game is Resident Evil 2.

It has been verified that, with the vast majority of games, disabling this provides a speedup of at least 10fps. Usually the performance difference is much higher, though. Try experimenting with it. If you experience no game-breaking bugs or visual anomalies, it’s safe to disable this for the game you’re running and enjoy higher performance.
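The “at least 10fps” rule of thumb can be checked against the benchmark tables earlier in this article. The snippet below is a small Python sketch that does so for a few rows taken from the RTX 2080 Ti table. The function names are invented for this example, and only a subset of games is included.

```python
# (title, synchronous fps, asynchronous fps) from the RTX 2080 Ti table.
SYNC_VS_ASYNC = [
    ("007 GoldenEye", 119, 133),
    ("Banjo Tooie", 132, 148),
    ("Doom 64", 282, 322),
    ("Perfect Dark (High-res)", 125, 130),
    ("Pilotwings 64", 125, 144),
    ("Quake", 262, 300),
]

def async_gains(rows):
    """Map each title to the fps gained by turning Synchronous RDP off."""
    return {title: async_fps - sync_fps for title, sync_fps, async_fps in rows}

def below_threshold(gains, threshold=10):
    """Titles whose gain is smaller than `threshold` fps."""
    return [title for title, gain in gains.items() if gain < threshold]

gains = async_gains(SYNC_VS_ASYNC)
print(gains)
print(below_threshold(gains))
```

In this subset only Perfect Dark (High-res) falls short of a 10fps gain, which fits the “vast majority of games” wording.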

Video Interface Options

ParaLLEl-RDP emulates the N64 RDP’s VI module. The VI applies plenty of postprocessing to the final output image to further smooth out the picture. The options below allow you to enable or disable some of these VI settings on the fly. Changing them can be beneficial if you want to use frontend shaders on top, since disabling some of these postprocessing effects can result in a radically different output image.

(ParaLLEl-RDP) VI Interlacing: Disabling this will disable the VI serration bits used for interlaced video modes. Turning this off essentially looks like basic bob deinterlacing, and the picture might become shaky as a result.

(ParaLLEl-RDP) VI Gamma Filter: Disabling this will disable the hardware gamma filter that some games use.

(ParaLLEl-RDP) VI Divot Filter: Disabling this will disable the median filter which is intended to clean up some glitched pixels coming out of the RDP. The difference in output is subtle, and it usually seems to apply to shadow blob decals.

(ParaLLEl-RDP) VI AA: Disabling this will disable Anti-Aliasing.

(ParaLLEl-RDP) VI Dither Filter: The VI’s dither filter is used to make color banding less apparent with 16-bit pixels.

(ParaLLEl-RDP) VI Bilinear: VI bilinear is the internal upscaler in the VI. Disabling this is usually a good idea, since it is typically used to upscale horizontally.

By disabling VI AA and enabling VI Bilinear, the picture output looks just like Angrylion’s “Unfiltered” mode currently does.
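To give a feel for what a median filter like the VI divot filter described above does to a stray pixel, here is a deliberately simplified sketch in Python. It applies a 3-tap horizontal median to every interior pixel of a row. This is an assumption-laden toy, not paraLLEl-RDP’s actual shader logic, and the function names are invented for this example.

```python
def median3(a, b, c):
    """Median of three values."""
    return sorted((a, b, c))[1]

def divot_like_filter(row):
    """Apply a 3-tap horizontal median to a row of (r, g, b) pixels.

    Toy model only: edge pixels are left untouched and every interior
    pixel is filtered unconditionally.
    """
    out = list(row)
    for x in range(1, len(row) - 1):
        left, mid, right = row[x - 1], row[x], row[x + 1]
        # Take the median separately for each color channel.
        out[x] = tuple(median3(l, m, r) for l, m, r in zip(left, mid, right))
    return out

# A dark row with one glitched magenta pixel in the middle.
row = [(10, 10, 10), (10, 10, 10), (255, 0, 255), (10, 10, 10), (10, 10, 10)]
print(divot_like_filter(row))
```

The single outlier is replaced by the value of its neighbours while the rest of the row is unchanged, which is why the visible difference from this kind of filter tends to be subtle.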

FAQ

Will this renderer be ported to OpenGL?

Here is the short answer – no. Not by us, at least. Reasons: compared to Vulkan, OpenGL is an outdated API that does not support the features required by paraLLEl-RDP. GL does not support 8/16-bit storage, external memory host, or async compute. Even if someone were able to make it work, it would only work on the very best GL implementations, where Vulkan is supported anyway, rendering it mostly moot.

Ports to DirectX 12 are similarly not going to be considered by us, though others can feel free to attempt one. One word of warning – even DirectX 12 (yes, even Ultimate) is found lacking when it comes to providing the graphics techniques that ParaLLEl RDP is built around. Whoever takes on the endeavor of porting this to DX12 or GL 4.5/4.6 will have their work cut out for them.