I needed a break from paraLLEl RDP, and I wanted to give PSX a shot to have an excuse to write a higher level Vulkan renderer backend. The renderer backends in Beetle PSX are quite well abstracted away, so plugging in my own renderer was a trivial task.

The original PlayStation is certainly a massively simpler architecture than N64, especially in the graphics department. After one evening of studying the Rustation renderer by simias and PSX GPU docs, I had a decent idea of how it worked.

Many hardware features of the N64 are non-existent:

- Perspective correctness (no W from GTE)

- Texture filtering

- Sub-pixel precision on vertices (wobbly polygons, wee)

- Mipmapping

- No programmable texture cache

- Depth buffering

- Complex combiners

My goal was to create a very accurate HW renderer which supports internal upscaling. Making anything at native res for PSX is a waste of time as software renderers are basically perfected at this point in Mednafen and more than fast enough due to the simplicity.

Another goal was to improve my experience with 2D heavy games like the Square RPGs which heavily mix 2D elements with 3D. I always had issues with upscaling plugins back in the day as I always had to accept blocky and ugly 2D in order to get crisp 3D. Simply sampling all textures with bilinear is one approach, but it falls completely flat on PSX. Content was not designed with this in mind at all, and you’ll quickly find that tons of artifacts are created when the bilinear filtering tries to filter outside its designated blocks in VRAM.

The final goal is to do all of this without ugly hacks, game specific workarounds or otherwise shitty code. It was excusable in a time where graphics APIs could not cleanly express what emulation authors wanted to express, but now we can. Development of this renderer was a fairly smooth ride, mostly done in spare time over ~2 months.

Credits

This renderer would not exist without the excellent Mednafen emulator and Rustation GL renderer.

Tested hardware/drivers

- nVidia Linux/Windows 375.xx+ (works fully)

- AMDGPU-PRO 16.30 Linux (works fully)

- Mesa Intel (Ivy Bridge half-way working, Broadwell+, fully working, you’ll want to build from Git to get some important bug fixes which were uncovered by this renderer :D)

- Mesa Radeon RADV (fully working, you’ll want to build from Git to get support for input attachments)

– But, but, I don’t have a Vulkan-capable GPU

Well, read on anyways, some of this work will benefit the GL renderer as well.

– But, but, you’re stupid, you should do this in GL 1.1 and hack it until it works

No 🙂

– Fine, but clearly this is just for shits and giggles

Doing it for the lulz is always a valid reason.

Source

The source will be merged upstream to Github immediately.

PSX GPU overview

The PSX GPU is a very simple and dumb triangle rasterizer with some tricks.

VRAM

The PSX has a 1024×512 VRAM at 16bpp, giving us 1MB of VRAM to work with. Interestingly enough, this VRAM is actually organized as a 2D grid, and not a flat array with width/height/stride. This certainly simplifies things a lot as we can now represent the VRAM as a texture instead of shuffling data in and out of SSBOs.

Unlike N64, the CPU doesn’t have direct access to this VRAM (phew), so access is mediated by various commands.

Textures

The PSX can sample textures at 4-bit palettes, 8-bit palettes or straight ABGR1555, very neat and simple. Texture coordinates are confined to a texture window, which is basically an elaborate way to implement texture repeats. Textures are sampled directly from VRAM, but there is a small texture cache. For purposes of emulation, this cache is ignored (except for one particular case which we’ll get to …).

An annoying feature is that the color “0x0000” on PSX is always transparent, so all fragment shaders which sample textures might have to discard, another reason to be careful with bilinear.

Shading options

PSX just has 3 shading options, which makes our life very simple:

- Interpolate color from vertices

- Interpolate UV and sample nearest neighbor

- Sample texture multiplied by interpolated color (gouraud shading)

It is practical to not use uber-shading approaches here.

Semi-transparency

PSX has a weird way of dealing with transparency. There is no real alpha channel to speak of, we only have one bit, so what PSX does is set a constant transparency formula, (A + B, 0.5A + 0.5B, B – A, or 0.25A + B). If the high-bit of a texture color is set, transparency is enabled, if not, the fragment is considered opaque. Semi-transparent color-only primitives are simply always transparent.

Mask-bit

Possibly the most difficult feature of the PSX GPU is the mask-bit. The alpha bit in VRAM is considered a “read-only” bit if mask bit testing is enabled and the read-only bit is set. This affects rendering primitives as well as copies from CPU and VRAM-to-VRAM blits.

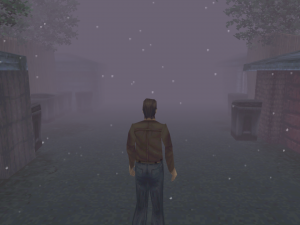

Especially mask-bit emulation + semi-transparency creates a really difficult blending scenario which I haven’t found a way to do correctly with fixed function (but that won’t stop us in Vulkan). Correctly emulating mask-bit lets us render Silent Hill correctly. The trees have transparent quads around them without it.

Intersecting VRAM blits

It is possible, and apparently, well defined on PSX to blit from one part of VRAM to another part where the rects intersect. Reading the Mednafen/Beetle software implementation, we need to kind of emulate the texture cache. Fortunately, this was very doable with compute shaders, although not very efficient.

Implementation details

Feature – Adaptive smoothing

As mentioned, I prefer smooth 2D with crisp-looking 3D. I devised a scheme to do this in post.

The basic idea is to look at our 4x or 8x scaled image, we then mip-map that down to 1x with a box filter. While mip-mapping, we analyze the variance within the 4×4 or 8×8 block and stick that in alpha. The assumption here is that if we have nearest-neighbor scaled 2D elements, they typically have a 1:1 pixel correspondency in native resolution, and hence, the variance within the block will be 0. With 3D elements, there will be some kind of variance, either by values which were shaded slightly differently, or more dramatically, a geometry edge. We now compute an R8_UNORM “bias-mask” texture at 1x scale, which is 0.0 where we estimate we have 3D elements, and 1.0 where we estimate we have 2D. To avoid sharp transitions in LOD, the bias-mask is then blurred slightly with a 3×3 gaussian kernel (might be a better non-linear filter here for all I know).

On final scanout we simply sample the bias-mask, multiply that by log2(scale) and use that as an explicit lod in textureLod() with trilinear sampling, and magically 2D elements look smooth without compromising the 3D sharpness. Sure, it’s not perfect, but I’m quite happy with the result.

Consider this scene from FF IX. While some will prefer this look (it’s toggleable), I’m not a big fan of blocky nearest-neighbor backgrounds together with high-res models.

With adaptive smoothing, we can smooth out the background and speech bubble back to native resolution where they belong. You may notice that the shadow under Vivi is sharp, because the shadow which modulates the background is not 1:1. This is the downside of doing it in post certainly, but it’s hard to notice unless you’re really looking.

The bias mask texture looks like this after the blur:

Potential further ideas here would be to use the bias-mask as a lerp between xBR-style upscalers if we wanted to actually make the GPU not fall asleep.

There is nothing inherently Vulkan specific about this method, so it will possibly arrive in the GL backend at some point as well. It can probably be used with N64 as well.

Obviously, for 24-bpp display modes (used for FMVs), the output is always in native resolution.

GPU dump player

Just like the N64 RDP, having an offline dump player for debugging, playback and analysis is invaluable, so the first thing I did was to create a basic dump format which captures PSX GPU commands and plays them back. This is also nice for benchmarking as any half-capable GPU will be bottlenecked on CPU.

PGXP support

Supporting PGXP for sub-pixel precision and perspective correctness was trivial as all the work happens outside the renderer abstraction to begin with. I just had to pass down W to the vertex shader.

Mask bit emulation

Mask bit emulation without transparency is quite trivial. When rendering, we just use fixed function blending, src = INV_DST_ALPHA, dst = DST_ALPHA.

With semi-transparency things get weird. To solve this, I made use of Vulkan’s subpass self-dependency feature which allows us to read the pixel of the framebuffer which enables programmable blending. Now, mask-bit emulation becomes trivial. This feature is a standard way of doing the equivalent of GL_ARB_texture_barrier, GL_EXT_framebuffer_fetch and all the million extensions which implement the same thing in GL/GLES. For mask-bit in copies and blits, this is done in compute, so implementing mask bit here is trivial.

Copies/Blits

Copies in VRAM are all implemented in compute. The main reason for this is mask bit emulation becomes trivial, plus that we can now overlap GPU execution of rendering and blits if they don’t intersect each other in VRAM. It is also much easier to batch up these blits with compute, whereas doing it in fragment adds some restrictions as we would need to potentially create many tiny render passes to blit small regions one by one, and we need blending to implement masked blits, which places some restrictions on which formats we can use.

When blitting blocks which came from rendered output, the implementation blits the high-res data instead. This improves visual quality in many cases.

Being careful here made the FF8 battle swirl work for me, finally. I’ve never seen that work properly in HW plugins before 🙂 PGXP is enabled here with perspective correctness as well.

Intersecting VRAM blits

For intersected VRAM blits, I dispatch one 128-thread compute group which basically implements the C++ variant as-is. It emulates the texture cache by reading in data from VRAM into registers, barrier(), then writing out. This then loops through the blit region. It’s fairly rare, but this case does trigger in surprising places, so I figured I better do it as accurate as I could.

The Framebuffer Atlas – Hazard tracking

The entire VRAM is one shared texture where we do all our rendering, scanout, blits, texture sampling and so on. I needed a system where I could track hazards like sampling from a VRAM region that has been rendered to, and deal with changing resolutions where crazy scenarios like CPU blitting raw pixels over a framebuffer region which was rendered in high resolution. Vulkan allows us to go crazy with simultaneous use of textures (VK_IMAGE_LAYOUT_GENERAL is a must here), as long as we deal with sync ourselves.

In order to support high-res rendering and sampling from textures, I needed to deal with two variants of VRAM:

- One RGBA8_UNORM texture at 1024x512xSCALE, rationale for RGBA8 is mandated support for image load/store, it has alpha (for mask bit) and good enough bit-depth. This texture also has log2(scale) + 1 mip-levels, so we can do the mip-mapping step of adaptive smoothing in place.

- One R32_UINT texture at 1024×512. I actually wanted R16_UINT, but this is not mandated for image load/store. Here we store the “raw” bit pattern of VRAM, which makes paletted texture reads way cheaper than having to pack/unpack from UNORM.

I split the VRAM into 8×8 blocks. All hazards and dependencies are tracked at this level. I chose 8×8 simply because it fits neatly into 64 threads on blits (wavefront size on AMD), and the smallest texture window is 8 pixels large, nice little coincidence 🙂

Each block has 2 bits allocated to it to track domain ownership:

- Block is only valid in UNSCALED domain.

- Block is valid in both UNSCALED and SCALED, but prefer SCALED.

- Block is valid in both UNSCALED and SCALED, but prefer UNSCALED.

- Block only valid in SCALED domain.

Whenever I need to read or write VRAM in a particular domain, I need to check the atlas to see if the domain is out-of-sync. If it is, I will inject compute workgroups which “resolve” one domain to the other.

If the access is a “write” access, the block will be set to “UNSCALED only” or “SCALED only” so that if anyone tries to access the block in a different domain, they will have to resolve the block first.

To resolve SCALED to UNSCALED, a simple box-filter is used. In effect, at 4x scale we get 16x supersampling, or 64x SSAA at 8x scale 😀 To resolve UNSCALED to SCALED, nearest neighbor is used. The rationale for doing it this way is that resolving up and down in scale is a stable process. Using nearest neighbor for up-resolves also works excellent with adaptive smoothing since we will get a smoothed version of the block which was resolved from UNSCALED and wasn’t overwritten by SCALED later.

Another cool thing is that I use R32_UINT in the UNSCALED domain, so I actually pack in 10-bit color on resolve. Regular ABGR1555 goes in the lower 16-bits, but the upper 16 bits are used to hide “hidden” precision bits, which are used if the texture is ever read as straight ABGR1555. This greatly improves image quality on any framebuffer effects without having to resolve with dithering and keeps palette reads efficient.

One very interesting bug I had at some point was that the Silent Hill intro screen wouldn’t fade out, it turns out that it samples the previous frame with a feedback factor of ~0.998! We have to be very careful here with rounding, the problem I had was that at slightly darker tones round(unorm_color * 0.998) == unorm_color, so just a smear instead of fade out, especially since the main framebuffer was just 5-bit per color … The fix here was to try to mimic rounding modes closer to what PSX does on 8-bit -> 5-bit, simply chopping away LSBs, now it looked correct. Using 10-bpp resolves improved things a bit more.

Now, even though I can sync between domains, I still need to track write-after-write, read-after-write and write-after-read hazards within a domain. Whenever a GPU command reads or writes from an 8×8 block, I check to see if there are any pipeline stages which have also accessed the pipeline stage in a way which is a hazard. E.g. a write will need a pipeline barrier if there are any readers or writes, but a reader only needs to check if there are writers. If such a hazard is detected, a callback is signalled with which pipeline stages need to participate in a vkCmdPipelineBarrier and which caches need to be flushed/invalidated on the GPU. The bits are then cleared. Overall, I make due with 16 bits per block, which is very compact.

While this scheme is fine for blits and copies and whatnot, renderpasses are handled a bit special, instead of checking the atlas for every primitive, the bounding box of the render pass itself is considered instead. Only when the bounding box of the renderpass increases does it damage the atlas and resolve any hazards which may arise.

Render passes are always done in-order, so hazards between render passes are ignored in the atlas for simplicity. Overall, the performance of this approach turned out to be great, and seems to be very accurate for the content I’ve tested.

The atlas implementation is API agnostic, so hopefully this should fix some bugs in the GL renderer as well if integrated.

Render pass batching

PSX renders one primitive at a time, so it is quite obvious that we need to aggressively batch primitives. There is another side here which is important to consider, for tiled-based renderers on mobile, each render pass has a very significant cost in that beginning/ending the render pass needs to read-in/write-out all memory associated in the render area, which is a quite large drain on performance. PSX games tend to make it difficult for us as clear rects come in, scissor boxes change and we need to batch as aggressively as we can here. Not all games use clear rects, so using loadOp = CLEAR isn’t always an option.

The approach I took is very similar to the Rustation renderer, but with some extra considerations for Vulkan render passes.

As a primitive comes in, it is placed in one or more queues:

- Opaque non-textured primitives

- Opaque textured primitives

- Semi-transparent textured primitives

- Semi-transparent primitives (including textured) and primitives which use mask bit

The screen space bounding box is computed for this primitive. Using this information we can figure out if the primitive is “scissor invariant”, i.e. the scissor box cannot clip any pixel in the primitive. If this is the case, we can say the primitive belongs to scissor instance -1. If the primitive can be clipped, we assign the primitive a scissor index. The scissor index increases whenever set_draw_area() changes the scissor box. From here, we enter the atlas to see if the union of the scissored bounding box and existing render pass area increases, if it does, we need to check for hazards to avoid any synchronization issues. If this happens, we flush out the render pass, synchronize and start a new render pass.

For clear rects, we similarly expand the render area as needed. If the current render area == clear rect area, this becomes a clear op candidate. If the render pass is later flushed with this particular area, we know we can use loadOp = CLEAR and save lots of readbacks on tiled GPUs, yay.

When the render pass is flushed, we render out our queues in a particular order. While the PSX GPU does not have depth buffers, it doesn’t mean we cannot use depth buffering ourselves to sort primitives in a more favorable order.

Opaque primitives are sorted by scissor index, and then front-to-back. These are rendered first. Then, we consider the semi-transparent textured primitives, these are conditionally semi-transparent. Just like Rustation, we render the primitives as if they were opaque, and discard the fragments if they are indeed opaque. If they are opaque, we end up writing the primitives Z to the depth buffer, serving as a mask (depth test = LESS) when we later redraw these primitives again a second time.

Now that we have sorted out the opaque pixels, we render the semi-transparent primitives in-order, batching up as many primitives as we can depending on the VkPipeline they need to use. While some crazy reordering can be done here if primitives don’t overlap, I doubt it’s worth it.

Primitives which use mask bit and semi-transparency are always drawn alone, because we need to perform a by-region vkCmdPipelineBarrier(COLOR_ATTACHMENT -> INPUT_ATTACHMENT) to safely read the framebuffer. This is quite expensive on IMR GPUs, but performance is just fine in the prime example of this PSX feature, which is Silent Hill. On tile based GPUs, this is basically free though, so that will be interesting to test in the future. 🙂

An important case where having a tight bounding box on our draw area is the MGS codec, generated from a frame trace in rsx-player:

The “bloom” effect is done by rendering the codec text to the lower left, then blend it with offsets on top, effectively creating a gauss kernel (!?) If we used the draw rect naively as the bounding box, we would create 13-15 render passes just to draw this thing as the hazard tracker would think that we rendered to a framebuffer while also trying to sample from it at the same time, one render pass for each blend step.

Line rendering

Line rendering is always a PITA. PSX has a very particular rasterization pattern which games sometimes rely on to draw primitives correctly, you may have noticed the one pixel that was wrong in the video above … ye, it’s using lines, go figure. The current implementation generates a quad which tries its best to approximate wide lines to match the rasterization pattern of PSX, but it’s not quite there yet.

Vulkan higher level API

This time around I wanted an excuse to create a higher level Vulkan API. The Vulkan backend in the RDP is a bit too explicit in hindsight and adding things like VI filtering would require a ton of boilerplate crap to deal with render passes etc … so, this time around I wanted to do it better.

I’m quite happy with the API as a standalone renderer API and I hope to reuse this in the RDP and other side projects when I get back to that.

Reusable PSX renderer implementation

The renderer exposes a C++ API which closely matches the PSX GPU. It should be fairly straight forward to reuse in any other PSX emulator or maybe even used as a renderer for a retro-themed game which tries to mimic the look and feel of early 3D games.

Performance

As you can expect, performance is good. The better desktop GPUs easily render this stuff at 8K resolution if you’re crazy enough to try that. In more modest resolutions, 1000++ FPS is easy, you’re going to be CPU bound in the emulator anyways, might as well crank it as high as it’ll go. The atlas hazard tracking doesn’t seem to appear in my profiles, so I guess it’s fast enough.

Interestingly enough, I was worried that VK_IMAGE_LAYOUT_GENERAL would decimate performance on AMD, but it seems just fine, guess I’m not bandwidth bound. 🙂

Enabling this renderer in Beetle

Make sure you enable the Vulkan backend in RetroArch. Beetle will now try multiple backends until one of them succeeds, the final fallback is software.

Bugs

While I haven’t tested every game there is, I think it’s quite solid already, in far better shape than paraLLEl RDP is at least. The bugs I know of so far are all minor visual glitches which are likely due to either upscaling or slightly off rasterization rules.

Source code repository

The source code repository to Mednafen/Beetle PSX can be found here –

https://github.com/libretro/beetle-psx-libretro